Google can downrank a website in its search results for several reasons. My first experience with downranking was on my very first day at a job in 2011. The day I walked into the building, Google released their first Panda update. My new employer, being a "content creator," disappeared from search results. This was a multimillion-dollar company that had teams of writers and a portfolio of websites. They depended on Google, and not appearing in search meant we went on code red that first day.

But it's not just large companies. Just this year, as AI Overviews have come to dominate the search page, I've seen traffic to this blog falter. At one point, the number of impressions was increasing, yet the number of clicks declined. I mostly blamed it on AI Overviews, but it didn't take long before impressions also dropped. It wasn't such a big deal to me since the majority of my readers now come through RSS.

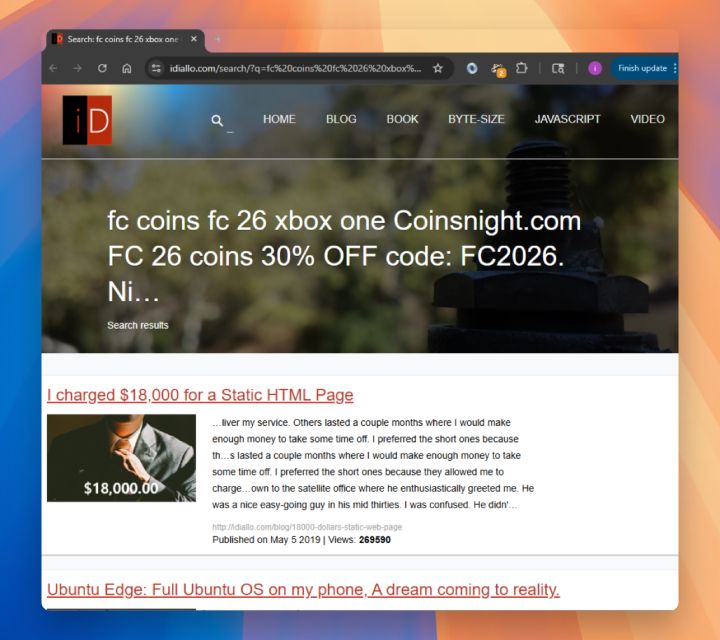

Looking through my server logs, I noticed that web crawlers had been accessing my search page at an alarming rate. And the search terms were text promoting spammy websites: crypto, gambling, and even some phishing sites. That seemed odd to me. What's the point of searching for those terms on my website if it's not going to return anything?

In fact, there was a bug on my search page. If you entered Unicode characters, the page returned a 500 error. I don't like errors, so I decided to fix it. You can now search for Unicode on my search page. Yay!

But it didn't take long for traffic to my website to drop even further. I didn't immediately make the connection; I continued to blame AI Overviews. That was until I saw the burst of bot traffic to the search page. What I hadn't taken into account was that, now that my search page worked, any spammy search term entered was prominently displayed on the page and in the page title.

What I failed to see was that this was a vector for spammers to plant links on my website. Even if those weren't actual anchor tags on the page, they were still URLs to spam websites. Looking through my logs, I can trace the sharp decline of traffic to this blog back to when I fixed the search page by adding support for Unicode.

I didn't want to delete my search page, even though it primarily serves me for finding old posts. Instead, I added a single meta tag to fix the issue:

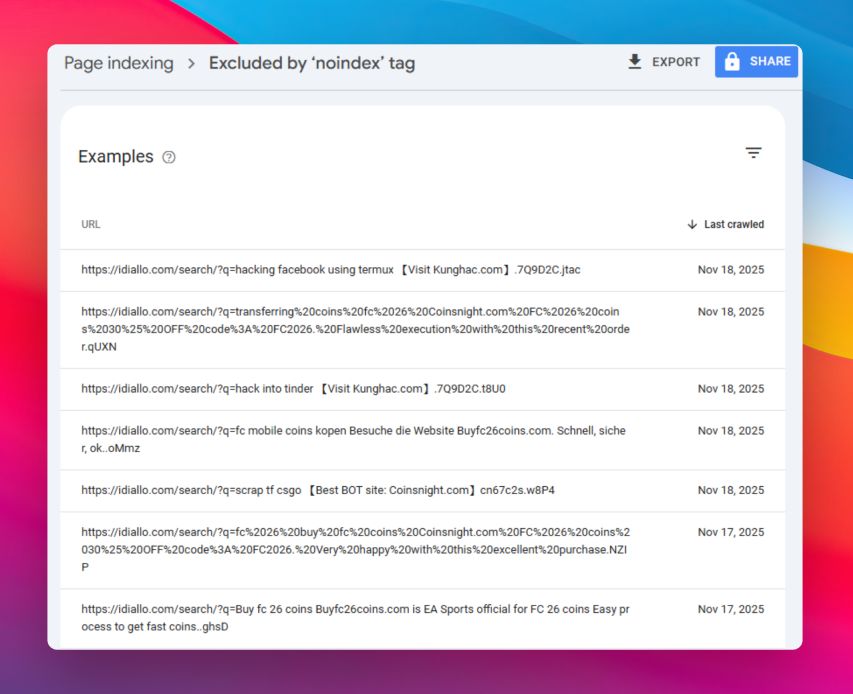

`<meta name="robots" content="noindex" />`

What this means is that crawlers, like Google's indexing crawler, will not index the search page. Since the page is not indexed, the spammy content will not be used as part of the website's ranking.
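One way to confirm the tag is actually being served is to parse the page's HTML and look for a robots meta tag that includes `noindex`. Below is a minimal Python sketch using only the standard library. The names `RobotsMetaParser` and `has_noindex` are hypothetical helpers made up for illustration, not part of this blog's code.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags such as <meta ... />.
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            # content may hold several comma-separated directives.
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html_text):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html_text)
    return "noindex" in parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex" /></head></html>'
    print(has_noindex(page))  # True
```

In practice you would feed it the HTML fetched from your own search page. This only checks the markup; it says nothing about how or when a given crawler acts on it.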

The result is that traffic has started to pick up once more. Now, I cannot say with complete certainty that this was both the cause of the traffic drop and its fix. I don't have data from Google. However, I can see the direct effect, and I can see through Google Search Console that the spammy search pages are being added to the "no index" issues section.

If you are experiencing something similar with your blog, it's worth taking a look through your logs, specifically search pages, to see if spammy content is being indirectly added.
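As a starting point for that kind of log review, here is a rough Python sketch that counts search queries containing something URL-like. It rests on several assumptions that may not match your setup: the log uses the common/combined access log format, the search page lives at `/search`, and the query is passed in a `q` parameter. The function name `suspicious_search_terms` and the URL heuristic are also made up for illustration.

```python
import re
from collections import Counter
from urllib.parse import urlsplit, parse_qs

# Pulls the request target out of a line like: "GET /search?q=... HTTP/1.1"
REQUEST_RE = re.compile(r'"(?:GET|POST) (\S+) HTTP/[^"]*"')

# Crude heuristic (an assumption): a term looks spammy if it embeds a URL.
URL_LIKE_RE = re.compile(
    r"(https?://|www\.|\.(com|net|org|xyz|top)\b)", re.IGNORECASE
)


def suspicious_search_terms(log_lines, search_path="/search", param="q"):
    """Count search terms that contain something resembling a URL."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue
        url = urlsplit(match.group(1))
        if url.path != search_path:
            continue
        # parse_qs decodes percent-encoding and turns "+" into spaces.
        for term in parse_qs(url.query).get(param, []):
            if URL_LIKE_RE.search(term):
                counts[term.strip().lower()] += 1
    return counts


if __name__ == "__main__":
    sample = [
        '203.0.113.5 - - [10/Oct/2025:13:55:36 +0000] '
        '"GET /search?q=best+casino+www.example-spam.com HTTP/1.1" 200 512',
        '203.0.113.6 - - [10/Oct/2025:13:55:40 +0000] '
        '"GET /search?q=unicode HTTP/1.1" 200 512',
    ]
    for term, hits in suspicious_search_terms(sample).most_common(10):
        print(hits, term)
```

To run it against a real log, pass an open file object as `log_lines`. The heuristic will miss spam that contains no URL and may flag legitimate queries, so treat the output as a list of things to inspect rather than a verdict.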

I started my career watching a content empire crumble under Google's algorithm changes, and here I am years later, accidentally turning my own blog into a spam vector while trying to improve it. The tools and tactics may have evolved, but some things never change. Google's search rankings are a delicate ecosystem, and even well-intentioned changes can have serious consequences.

I often read about bloggers who never look past the content they write, meaning they don't care whether you read it or not. But the problem comes when someone else takes advantage of your website's flaws. If you want to maintain control over your website, you have to monitor your traffic patterns and investigate anomalies.

AI Overviews are most likely responsible for the original traffic drop, and I don't have much control over that. But they were also a convenient scapegoat to blame everything on and an excuse for not looking deeper. I'm glad at least that my fix was something simple that anyone can implement.

Comments(2)

Matthew Brunelle :

Similarly, I recreated my Jekyll blog as a Ghost blog, but handled the transition poorly. It looked similar to when a site goes down and then is bought to make a link farm. Now I'm in Google purgatory and totally de-indexed. And I really don't want to play the SEO game.

Good news is I get a pretty steady stream of people from DDG, Kagi and Bing.

Ibrahim author :

At this point @Matthew the only SEO game I'm willing to play is the technical one. As in making sure links work, redirect when needed, and point to the right place. In other words, users are the main target. Anything else is a game of cat and mouse.
