As a web developer, I am proud when asked about my work experience. I worked with a dozen programming languages and I can fairly quickly learn a new one. I use them both for work and privately. When I have new ideas, I open up vim, write code, test the idea locally. If it works I then quickly spin a digitalocean droplet to validate the idea with real people. All this just for fun. But from time to time, I am asked about Artificial Intelligence and my answer is not as enthusiastic.

I had programmed for more then a decade before I realized I didn't know the first thing about AI. When the world was talking about self-driving cars and chatbots, I dismissed it as a trend. But deep down, I was just ashamed to be a clueless web developer with no experience in AI. I wasn't really sure how AI was applied in a real world project.

What is AI? Is it a library? Do you add that library to your code and the application suddenly becomes intelligent? Or is it the number of if-and-elses that matters? How many of those do you add to your code before it is considered AI?

Reading Wikipedia alone introduced hundreds of new terms I was not familiar with. Things like neural networks, GANs, biases, disappearing gradients, and many more. After many opened tabs, going deeper in the rabbit whole, spinning for hours on end, I am back at the beginning with more questions than answers. What is AI?

I'm a hands-on kind of guy. The only way for me to learn is by building. I set out to build a small project using the simplest form of machine learning, a subset of AI, and it triggered a new understanding in me. Now I can finally navigate in the world of AI. I'll write a separate article about Project Jaziel soon. But for now, if you are a web developer and like me, you often hide in shame when artificial intelligence is mentioned, then this article is for you.

So, what is AI?

AI has a long history that even predates modern computers. I will leave it to Yann LeCun to give you an overview (27 min video). Note that you will often hear his name in AI literature. The gist of it is that AI is a problem solving technique loosely based on our understanding of the smallest units of the human brain: the neuron. There are two types of computer intelligence.

One is Artificial General Intelligence or AGI. This is an agent that can reasonably perform general, yet complex tasks. For example, it can keep up with a conversation, play a game, walk, recognize any type of object, answer emails, and it can do all of this at the same time. Think of it as Hal 9000, Samantha in the movie Her, or Data in Star Trek, or my favorite TARS in Interstellar. They can be drop-in replacement for a human. This is the de facto standard in science fiction, but we are nowhere near building something like this in real life.

[...] AGI is an exciting goal for researchers to work on. But it'll take technological breakthroughs before we get there and it may be decades or hundreds of years or even thousands of years away. — Andrew Ng. (Ai for Everyone Deeplearning.ai)

This contrasts the usual 5 to 10 years we often hear in press releases.

The second type of computer intelligence is Artificial Narrow Intelligence or ANI. ANI is so popular that it is just referred to as AI. This is when the intelligence is specialized in a narrow field. Like an AI that can classify pictures of dogs and cats, transcribe audio, or that can translate text. It specializes in that one field and one field only. Any insight learned by the machine is not transferable to another field.

The most popular subset of ANI is machine learning. And the AI revolution we are experiencing today is part of a subset of machine learning called neural networks. Whether it's self-driving cars or chatbots, they are all powered by machine learning. That's what I would like to focus on today.

Terms to be familiar with and Math.

You can build an AI project using popular frameworks like Tensorflow or Pytorch without ever understanding how they work internally. I did just that but then I had a hard time building a second project. Underneath these powerful frameworks is some Math and some relatively simple concepts. Here I will present some terms, by no means a full definition. But I encourage you to research each term independently once you are done reading the article.

Linear regression is the attempt to find a relationship between an input and an output. Mathematically represented as y = w * x + b, where x is the input and y is the output.

Logistic regression, for the sake of this article, is similar to the linear regression. Only the result of the equation is a probability that exists between 1 and 0.

Weight is the importance or significance of an input in relation to an output. The larger the weight, the more the input has an impact on the output.

Bias is the threshold an artificial neuron has to get over in order to activate.

Sigmoid function is a function that squashes the results of an equations to a range between 0 and 1. The bigger the input, the closer to 1 the result is. The more negative the input is, the closer to 0 the result will be.

Loss function is used to calculate how far we are from a desirable value. The result helps us determine at which rate we need to adjust the weight (w) and bias (b) of our linear function. Ex: Cross-entropy loss function

So, machine learning.

Think of machine learning as a shortcut. It's a tool that can figure out the details of a problem for us. Let's take a look at Speech recognition. An old technology that existed since the 60s. By the 80s, a revolutionary method using N-grams took over the industry. In order to transcribe speech to text, engineers broke down audio into the smallest possible workable units. The units of sound would pass through multiple layers, at each stage an insight would be extracted using a specific algorithm.

For example, a recorded audio is broken down into audio samples, let say at 10 millisecond intervals. Here the first algorithm will try to clean up the sample to remove known artifacts then calculate a spectral band. When multiple samples are combined, a pattern of speech can be detected. A second algorithm tries to extract those patterns into units called phones, the smallest units of speech. Phones are combined then filtered through another algorithm that finally converts them into phonemes in a specific language. The next algorithm can combine phonemes in various ways to convert them to syllables. Syllables can be translated to alphabetical letters and matched against dictionary words.

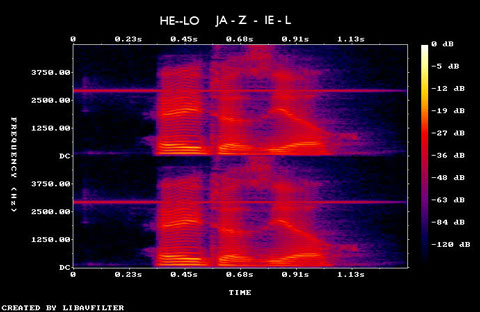

“Hello Jaziel” can be broken down into

Audio Sample → Spectral Band → phones → phonemes → syllables → words

Extracting each step required a different specialization to detect patterns. Engineers have studied each step to create an algorithm that can recognize the sound pattern regardless of accent, background noise, or distortion. It was a painstaking process and accurate only in the most perfect conditions. These devices wouldn't work on me, I have a non traditional English accent.

With modern AI or Neural Networks to be more specific, we skip all the steps in between. The model is presented with an audio sample and the resulting words or phrases. The neural networks then figures out the patterns all on its own. There is no need to extract phones, phonemes or any patterns. The complexity is learned automatically from the voice data. It's nothing short of fascinating.

But how does the machine figure it out? What does the code do? What's in the black box of neural networks? Let's take a look at a problem that has much fewer layers of complexity.

Good deal? Bad Deal?

Let's say I am in the market for a house. I have a well garnished bank account and I don't know the first thing about house prices. I call one of those real estate agents who advertise on the bus bench and the next thing you know, we are shopping. He shows me a list of houses and their prices.

The first house is 1,000 square feet and cost $200,000. How do I get to know if this is a good deal? Since I haven't bought a house before, I have no idea. So the trusted agent tells me, "Yes, this is a great deal"

He shows me another house. This one is 1200 ft2 and cost $300,000. "This one," he says, "is a bad deal." When he shows me the next house, I can kinda guess if it is a good or bad deal.

| # | Size | Price | Deal |

|---|---|---|---|

| 1 | 1,000 | $200,000 | Good |

| 2 | 1,200 | $300,000 | Bad |

| 3 | 900 | $200,000 | Good |

In my head, I can create a relationship between the set of numbers. I use the first house as reference for good price. The second house grows by 200 ft2 , but the price jumps a whole $100,000. Since the agent says this is not a good deal, I'll assume that the jump for each square foot added should be much smaller. For the third house, the square footage went down but the price remained the same. This convinces me that the first house was really a good deal.

Show me a house that is 800 square feet with a price of $300k and I can tell you it's a bad deal with confidence. I can come up with an formula that takes the price and divides it by the size, then add a threshold of 225 dollars/ft2. Anything above 225 is a bad deal.

Looking at just 3 example, a person can learn to determine whether a house price is good or bad. Our goal is to teach a machine to do just that, without giving it a specific algorithm. The most popular type of machine learning algorithm these days is Deep Learning or artificial neural networks.

The technique was conceived by trying to replicate the way brain cells or neurons compute information. Although the algorithms themselves differ from the way real neurons work, the analogy is still used to this day.

Let's first illustrate how we can teach our software to be intelligent. Unlike what I thought before, there isn't a library you can add to your code and it suddenly makes it intelligent. There are tools and frameworks that exist to help build your neural network, but the core of it is just a few algorithms. One of the simplest of these algorithms you can use to teach a machine is called logistic regression.

Single layer neural network or logistic regression.

Let's create a simple function, that takes 3 arguments. Let's call it train()

function train(feature1, feature2, expected_result) {...}

What this function does is take the square footage of a house, its price then tries to fit it in the expected result. For example I can give it 1000ft2, $200,000 and 1. Good and bad can be represented as 1 and 0 respectively. Then, you do Math. There is rarely a time when a web developer has to do some math. But luckily the math here is very simple. We use linear regression.

z = (w * x) + b

X is the feature value, like square footage. W is the weight. We use the weight to determine how much importance to put on a feature. B is for bias. We add a bias in the end to skew the result above or below a threshold. Before we start training, we can initialize W and B to some random values. Traditionally, the values are set to zero in a logistic regression network.

Because the result of our equation is supposed to yield a 1 or a 0, good or bad, we use a sigmoid function to constrain the result to a value ranging between 1 and 0. This makes our algorithm a logistic regression.

A = sigmoid(z)

Now if the result is a large number, A will be equal or close to 1. If the number is small or negative, A will get closer to zero. Now let's test it out with our first example. We use x1 and x2 since we have two features and each will have its own weight w1 and w2. This will be our forward pass.

// normalizing the values to get smaller numbers

x1 = feature1 / 1000

x2 = feature2 / 100000

// initialize w and b to zero

w1 = 0 // weight of the first feature

w2 = 0 // weight of the second feature

b = 0

// linear regression formula

z = (w1 * x1 + w2 * x2) + b

// sigmoid to squash the numbers to between 0 and 1

A = sigmoid(z)

If we replace the numbers with our first example, since the W1 and W2 are 0, then Z = 0:

A = sigmoid(z)

// sigmoid of zero is 0.5

Once we have this value or Activation, we can compare it to the expected result. We use a loss function to determine how far we were from the expected result. There are many types of loss functions, in our case we will use a cross-entropy loss function. For the first example, the expected answer was 1:

y = expected_result

// Cross-entropy loss function

// note that only one side is active at a time. If y is one, right side of

// the plus will be (1 - 1) and will equal zero. If y is zero, the left side

// will be zero.

loss = y * log(A) + (1 - y) * log(1 - A))

// -0.6931471805599453

Looks like we are far from the expected result. What we want to do is go back to our equations, and update the values of W and B just slightly until the number -0.69 gets closer to 0. The math involved is not complicated, but it is out of scope of this article. This process is called back propagation (backward pass). We can summarize it with these simple formulas:

// derivative of W or dw

dw1 = x1 * (A - y)

dw2 = x2 * (A - y)

// derivative of b or db

db = A - y

In order to find the correct values for W so that it will yield 1 after a sigmoid squash, we will update W with tiny steps (alpha) then repeat the linear equations one more time.

// alpha is the learning rate. Usually a small fractional number.

alpha = 0.01

// update w and b

w1 = w1 - alpha * dw1

w2 = w2 - alpha * dw2

b = b - alpha * db

We can run the whole thing in a for loop doing a forward pass, calculating the loss, then doing a backward pass and updating the values of W and B. We repeat the process until the loss is as close to zero as possible. Finding the optimal value for W and B is what training the model means.

In the real world, we won't just train it on one example, that would only teach it to work for our specific example. We would train it on thousands or hundreds of thousands of examples and use a cost function (the average of all losses). I trained a small model on dozens of examples, and I couldn't get it to perform better than 60% accuracy. That's still better than a coin toss. But with more data, the better the algorithm will perform.

function predict(sqft, price, W, b){...}

predict(2000, 250000, w, b)

// prints 1 or good deal

In a nutshell, this is AI, or machine learning, or neural networks, or logistic regression to be more precise. We can use it as a small application where you can enter two parameters, and it can give you a fairly accurate response. Looking at the code that powers it, it hardly looks intelligent.

Logistic regression is the simplest form of a neural network. We use the features, or characteristics of an agent, to try to compute a 1 or 0 with it. But this is not the only way. I've generated, hundreds of more examples of house prices, then classified them with the same algorithm. It was still stuck at %60 accuracy. I switched my neural network to use 1 hidden layer or turned it into a shallow neural network, and the accuracy quickly jumped to 98%.

Shallow (2 layer) neural network.

Now imagine instead of using the price or square footage, you replace your X with all the pixel values of an image. You can now use it to classify pictures.

Modify the structure of the neural network, and you end up with a convolutional network. Here, you can efficiently detect faces, cats, objects on a picture. Modify the structure some more and you have a recurrent neural network. There you can use it for speech to text or generate text like GPT-3.

All of this using relatively simple math, and the same formulas I've provided above. If we have to pinpoint one part of these equations that gives our code intelligence, it will be back propagation. This is where the machine goes back one step and updates the values of W and B to find new values that will make the equation more correct. Do this multiple times at the speed of a modern computer, and you got yourself an AI.

This is barely scratching the surface. But it should be enough to give you a good starting point for learning AI.

I found some pretty good resources that helped me understand some concepts. Below are links to these resources.

- Neural Networks Series by 3Blue1Brown

- Intro to Deep Learning

- Neural Network and Deep Learning by Michael Nielsen (free book)

Comments

There are no comments added yet.

Let's hear your thoughts