For over two decades, I’ve worked as a software developer. At some point along the way, writing JavaScript stopped being something I had to think about; it just happened. Building CRUD apps, managing forms, handling the DOM: these became second nature. I could step into almost any project and instantly start wiring things up. This is what Daniel Kahneman refers to as System 1 thinking: fast, intuitive, automatic.

But that ease didn’t come for free. It was built through years of deliberate effort, of debugging endless edge cases, of reading through documentation line by line. That’s System 2: slow, analytical, and effortful. It’s what you use when you’re learning something new, when you don’t yet have mental shortcuts to fall back on.

As developers, we constantly shift between these two modes. The longer we work in the field, the more we rely on System 1. Until the ground shifts beneath our feet.

I remember the first time I used Angular 1.0. Coming from the jQuery world, I found it completely foreign. Suddenly, my deep, intuitive knowledge meant little. I had to slow down, re-learn, and question my assumptions. But over time, as I built more Angular apps, it too became second nature. System 2 became System 1.

And then Angular 2.0 dropped. The whole model changed. It wasn’t just an update; it was a paradigm shift. Everything I had internalized had to be re-evaluated.

Now it’s React, Vue, or Svelte. Each framework comes with its own philosophy, patterns, and quirks. When I start working with one, I’m back to Googling everything I type. I’m in System 2 again. But I know the pattern. I’ve been here before. Slowly, it becomes intuitive.

But just as I settle into this new rhythm, AI enters the scene. Not as a new tool, but as a paradigm shift. One that doesn’t just require learning. It questions whether we need to learn at all.

Today, AI can scaffold entire applications, write components, even solve obscure bugs. With tools like GitHub Copilot and ChatGPT, it’s easy to get by without deeply understanding the code you’re deploying. At first, this feels empowering. But there’s a hidden cost.

To understand that cost, think about riding a horse. For centuries, humans relied on horses for transportation. It was a skill everyone needed. Then the automobile came along and replaced the horse. We didn’t lose much, because driving still required some form of System 2 engagement. We had to learn, we had to adapt.

But what happens when the car drives itself?

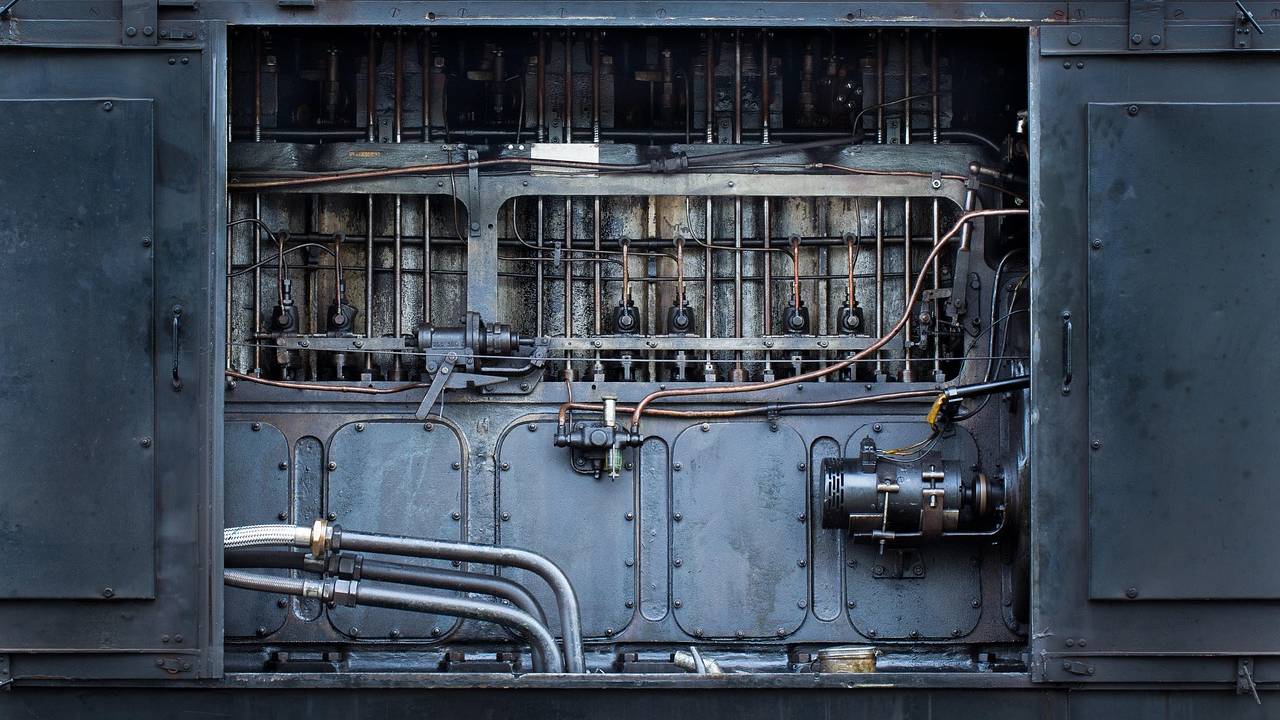

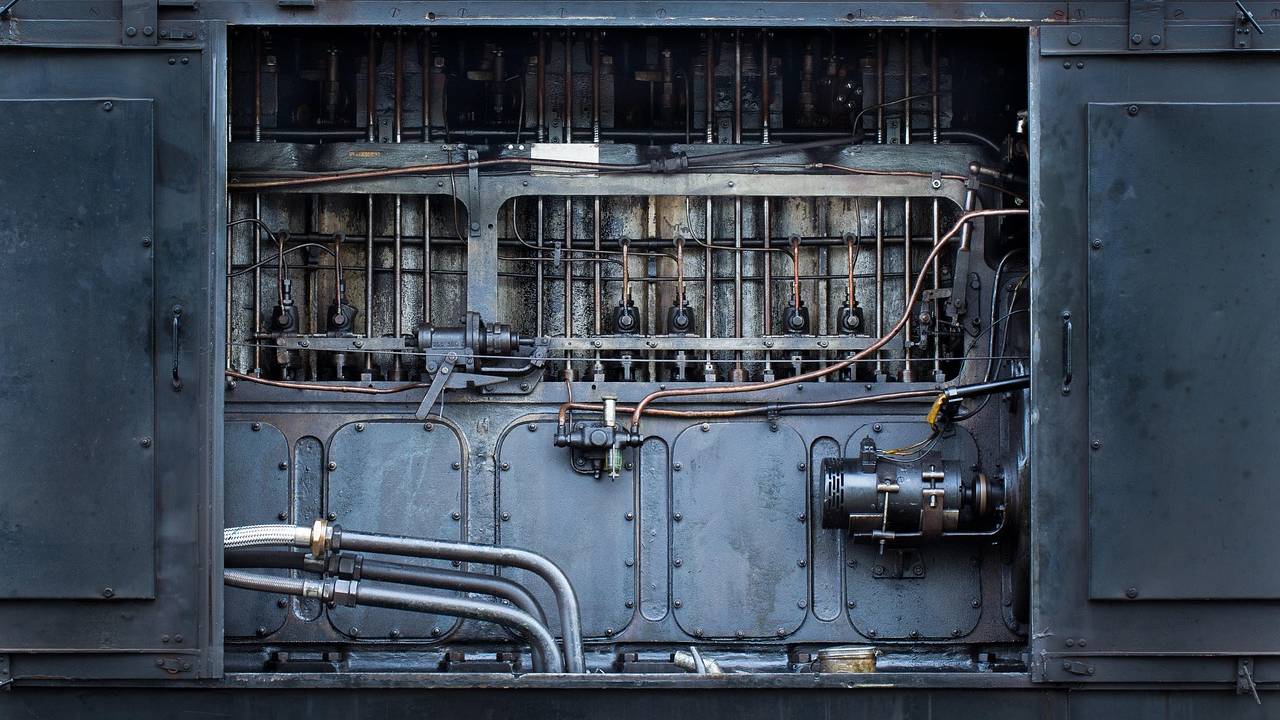

In his short story *The Machine Stops*, E.M. Forster paints a future where a vast machine handles every aspect of human life. People live isolated lives, fully dependent on the machine. They don’t know how it works. They only know how to ask it for things. When the machine breaks down, society collapses. No one remembers how to fix it.

This is the danger of bypassing both System 1 and System 2. If we no longer struggle to learn (System 2) and no longer build intuition (System 1), we become entirely dependent on tools we don’t understand. We trade capability for convenience.

In my personal work, I avoid chasing trends. I choose tools that are battle-tested. Tools I can reason about. Sure, at work, I use whatever the job demands. But when I build for myself, I want to understand every line.

Learning to learn is a noble idea. But more important is learning to unlearn, and knowing when to resist the comfort of automation. Because one day, when the machine stops, we’ll need to remember how to think.